Using Azure Workload Identity on AKS

We've been using Azure AD Pod Identity for the last couple of years, and Microsoft have now made its replacement, Azure AD Workload Identity (AZWI), generally available. With the GA release of AZWI, Azure AD Pod Identity has been deprecated and will officially stop receiving security patches in September 2023. If you're using the legacy solution in production, now would be a good time to start planning its replacement.

In this blog, we'll install Workload Identity onto AKS, have a look at the features and see how it compares to AAD Pod Identity.

Setup and install

AZWI requires that the host cluster performs the role of an OIDC issuer. In some cases this can be fairly complex to set up; for AKS, however, it is as simple as enabling the OIDC Issuer option on your cluster. The good news is that this can be enabled retrospectively if you didn't build your cluster with the issuer enabled. For the rest of this section, we'll assume that you've enabled the issuer and all is working.
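If the issuer isn't enabled yet, a minimal sketch of switching it on for an existing cluster with the Azure CLI might look like this. The function name and the example resource names are our own placeholders, not something from the official docs.

```shell
# Enable the OIDC issuer on an existing AKS cluster.
# $1 = resource group that contains the AKS resource
# $2 = AKS cluster name
enable_oidc_issuer() {
  az aks update \
    --resource-group "$1" \
    --name "$2" \
    --enable-oidc-issuer
}

# Example call (hypothetical names):
# enable_oidc_issuer "my-aks-rg" "my-aks-cluster"
```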

Get the OIDC issuer URL

We'll need to pass the cluster OIDC issuer URL to the AZWI Helm deployment, so let's grab that from our AKS cluster before going any further. As per the official documentation, we can get the URL by issuing the following Azure CLI command:
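A minimal sketch of that query, wrapped in a small helper so the names can be swapped easily. The function name and the example resource names are placeholders of ours.

```shell
# Print the OIDC issuer URL of an AKS cluster.
# $1 = resource group that contains the AKS resource
# $2 = AKS cluster name
get_oidc_issuer_url() {
  az aks show \
    --resource-group "$1" \
    --name "$2" \
    --query "oidcIssuerProfile.issuerUrl" \
    --output tsv
}

# Example call (hypothetical names), keeping the URL for later:
# OIDC_ISSUER_URL="$(get_oidc_issuer_url "my-aks-rg" "my-aks-cluster")"
```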

Make a note of the URL; we'll need it shortly.

Install the webhook controller

AZWI uses a mutating admission webhook controller to update workloads within the cluster; we can install the controller using a Helm v3 package as shown on the official website. The only mandatory configuration parameter here is the Azure Tenant ID, so ensure you update that when running the commands below. We're assuming you have the helm command-line utility installed, and that the current context in kubectl is set to your cluster.
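A sketch of the install, using the chart repository, release name and namespace from the upstream project's Helm instructions. The helper function names are ours, and the tenant ID in the example call is a placeholder.

```shell
# Install the AZWI mutating webhook with Helm.
# $1 = Azure AD tenant ID
install_azwi_webhook() {
  helm repo add azure-workload-identity https://azure.github.io/azure-workload-identity/charts &&
  helm repo update &&
  helm install workload-identity-webhook azure-workload-identity/workload-identity-webhook \
    --namespace azure-workload-identity-system \
    --create-namespace \
    --set azureTenantID="$1"
}

# List the webhook deployment and its pods once the release has completed.
check_azwi_webhook() {
  kubectl get deployments,pods --namespace azure-workload-identity-system
}

# Example call (placeholder tenant ID):
# install_azwi_webhook "00000000-0000-0000-0000-000000000000" && check_azwi_webhook
```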

There really isn't much more to the initial cluster setup, so once the Helm release has completed, check that both controller replicas are running. Then we're ready to move on and dive into a little bit of the theory.

The science bit

AZWI leverages the AKS OIDC service to form a bridge between Azure AD identities (applications or managed identities) and native Kubernetes service accounts within the cluster. Federated credentials exist within Azure that link the two entities together, and allow for access tokens to be provided from Azure AD to Kubernetes, permitting direct access to Azure resources via workloads using projected service account tokens.
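To make the link between the two sides concrete, here is a minimal sketch of creating a federated credential for a user managed identity with the Azure CLI. The walkthrough later in this post does the same job in Terraform, so treat this purely as an illustration; the function names and all example values are hypothetical. The subject follows the standard Kubernetes service account format, and the audience shown is the one AZWI uses by default.

```shell
# Build the subject claim Kubernetes puts in a service account token.
# $1 = namespace, $2 = service account name
service_account_subject() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Create a federated credential on a user managed identity.
# $1 = credential name        $2 = managed identity name
# $3 = resource group         $4 = cluster OIDC issuer URL
# $5 = namespace              $6 = service account name
create_federated_credential() {
  az identity federated-credential create \
    --name "$1" \
    --identity-name "$2" \
    --resource-group "$3" \
    --issuer "$4" \
    --subject "$(service_account_subject "$5" "$6")" \
    --audiences "api://AzureADTokenExchange"
}
```

Azure AD will only exchange a token whose issuer, subject and audience match the federated credential, which is why the namespace and service account name have to line up exactly.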

In theory, AZWI has been developed to make life easier than it was with Pod Identity; we no longer need to set up AzureIdentity resources for our workloads and can use native ServiceAccount resources with annotations to achieve the same result. AZWI no longer requires an intermediary (i.e. Node Managed Identity pods) to intercept IMDS requests, supports both Linux and Windows workloads, and removes the bottlenecks associated with the legacy architecture. The other major plus for non-AKS or private cloud customers is that AZWI is not tied to AKS: it will work with any cluster that can act as an OIDC issuer, regardless of cloud provider or physical location.

Another key advantage of the new model is that we have more flexibility in how we allocate our identities. As stated on the AZWI concepts page, we can have any combination of the following:

- one-to-one (a service account referencing an AAD object) - as per the diagram above

- many-to-one (multiple service accounts referencing the same AAD object)

- one-to-many (a service account referencing multiple AAD objects by changing the client ID annotation)

In most cases, we're going to go with a one-to-one mapping, but there are definitely going to be some use cases where the other approaches would be useful.

AZWI vs Pod Identity

AZWI was originally pencilled in as Azure AD Pod Identity v2 but was rebranded during development. Whilst the two products have similar use cases and outcomes, the architecture of AZWI is entirely different, so the deprecation of the old solution makes a lot of sense. Because the two solutions are so different, it's difficult to map features in one directly to the other, so let's run through the key differences instead.

- The AzureIdentity and AzureIdentityBinding are no longer used to assign identity to a pod. The pod ServiceAccount is now annotated with the identity ID:

- With the removal of the AzureIdentityBinding model, we no longer specify the aadpodidbinding label to pods. Now we specify access via a common label, that will instruct the admission controller to project the service tokens into the pod on startup. Note that we must also ensure that the service account linked to the identity is enforced on the pod:

- There is no longer an NMI controller to intercept Azure API traffic calls from the worker pods; token details are now dynamically projected into the pods, as shown below.

- The legacy MIC Deployment and NMI DaemonSet resources are replaced by a lightweight webhook controller Deployment resource. This architecture scales well and does not introduce host bottlenecks with a large number of IMDS consumers.

Moving from Pod Identity

One of the reasons Pod Identity was so popular was that it was widely supported by the CNCF vendors and their products. Most of the graduated applications had good, working examples of how to integrate with Pod Identity and, whilst it was still officially in preview, the product was stable and well understood by the community, even though it was fairly complex to set up and maintain.

As the end-of-support/end-of-life dates approach, a number of vendors have now updated their applications to support AZWI in addition to Pod Identity. Each vendor, however, has a different approach, which is to be expected since it is the application itself that consumes the mounted tokens and makes upstream calls into the Azure APIs.

The best advice here is to check in with your vendors and see where they are in terms of supporting AZWI; some will already support it (if they previously supported Pod Identity) but there will be some changes required in configuration and also in the way you provide credentials to the consuming services.

Some vendors, at the time of writing, still haven't provided support for AZWI, but are looking to do so imminently now that the solution has reached GA status.

Hello, Real World

The Azure AD Workload Identity documentation has a quick start guide which will run through the basics of using the product, so there's little value in repeating that here. Instead, we'll use AZWI in conjunction with a real third-party application, and walk through some tests to make sense of what's happening. We'll build some code that will perform the following:

- Create a user managed identity in our tenant

- Create a Key Vault, and assign permissions to the user managed identity

- Create a service account in our cluster, annotated with the user managed identity (client ID)

- Create a federated credential that references the service account and our user managed identity

- Finally, we'll deploy our application (External Secrets) and configure it to retrieve data from the vault

Naturally, we're going to do this in Terraform, to keep things simple. In production deployments, we would normally deploy the Kubernetes components using FluxCD or another GitOps tool.

Let's get started!

1. Getting ready

External Secrets is an operator that enables Kubernetes to synchronise secrets with external secret management solutions; in our example we'll integrate the product with Azure Key Vault using managed identity authentication.

Before we start, ensure that you have the following:

- You should have (at least) Contributor and User Access Administrator access to the AKS cluster and the resource group it resides in - we need to create resources and apply IAM changes to these resources

- Your target AKS cluster should (as per this section):

  - have the Azure AD Workload Identity operator installed

  - have the OIDC Issuer endpoint enabled

Your workstation will need the following software installed for your respective operating system, if you haven't already:

2. Clone the repo

We have created a public repository that contains the Terraform source code required for the deployment. Clone or download this repository to your local workstation, and open up a prompt in your terminal of choice at the /terraform folder; this is where the Terraform code lives.

Edit the my.auto.tfvars file in a text editor, updating the aks_resource_group_name and aks_cluster_name fields to match your environment. Just to state the obvious, the resource group required here is the one the AKS resource resides in, NOT the one with the worker resources in (usually this is suffixed -nodes).

Save the file and we're ready to deploy.

3. Deploy the code

Complete the following tasks in your shell of choice.

As we're not defining any provider parameters for the azurerm provider, we need to make sure that we are logged in using the Azure CLI and that the correct target subscription has been selected:
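A minimal sketch of that login step. The function name is ours and the subscription in the example call is a placeholder.

```shell
# Log in and select the subscription Terraform should deploy into.
# $1 = subscription name or ID
select_subscription() {
  az login &&
  az account set --subscription "$1" &&
  # Show which subscription is now active, as a sanity check.
  az account show --query "{name:name, id:id}" --output table
}

# Example call (placeholder subscription):
# select_subscription "my-subscription"
```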

Once we've done this, we can initialise our Terraform and apply the configuration:
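These are the standard Terraform commands, sketched here as a helper of our own that takes the folder holding the code as an optional argument.

```shell
# Initialise and apply the Terraform configuration.
# $1 = folder holding the Terraform code (defaults to the current folder)
deploy_terraform() {
  terraform -chdir="${1:-.}" init &&
  terraform -chdir="${1:-.}" apply
}

# Example call from inside the /terraform folder:
# deploy_terraform
```

Running terraform output afterwards will list the deployment outputs again if you lose them in the scrollback.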

Confirm the actions, and let the code deploy. This should take around 5 minutes, but could take longer depending on the sort of day Azure is having. We've all been there, I'm sure...

When complete, make a note of your key vault name, which will be provided as an output from the deployment. You'll need this when we validate our secrets later on.

Moving swiftly on, let's validate our deployment and look at how this all hangs together.

Taking a closer look

We'll be running a few kubectl commands next, so confirm that your current context is set to your target AKS cluster.
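A small guard along these lines can help avoid running checks against the wrong cluster. This is a sketch of our own; the function name and the context name in the example are hypothetical.

```shell
# Return 0 only when the current kubectl context matches the expected one.
# $1 = expected context name
require_context() {
  current="$(kubectl config current-context)" || return 1
  if [ "$current" != "$1" ]; then
    echo "Current context is '$current', expected '$1'" >&2
    return 1
  fi
}

# Example call (hypothetical context name):
# require_context "my-aks-cluster" || kubectl config use-context "my-aks-cluster"
```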

Validating the External Secrets Operator

The successful Helm deployment of the operator does not imply that it is working with our managed identity, so we need to check a few more details to validate this.

- Let's double check that the controller deployment was successful, and that we're all up to date:

- Assuming the operator installed, let's now check our ClusterSecretStore CRD deployment is in place, and functional:

- We're looking for a few details in the output:

  - The Provider: details should reflect the configuration we expect, using the nominated service account and the correct Key Vault URI

  - The store validated event should be reported by the resource; this means that External Secrets was able to connect to the vault successfully and read its contents

  - Finally, the Capabilities: output should be ReadWrite. We granted our identity Key Vault Administrator access to the underlying vault, so if required we could also push secret changes back into the vault (we won't in this example, for brevity).
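The checks above can be sketched with a few kubectl helpers. We're assuming the operator lives in a namespace called external-secrets; adjust ESO_NAMESPACE if your deployment uses something else. The function names are ours.

```shell
# Assumption: the operator was installed into the "external-secrets" namespace.
ESO_NAMESPACE="${ESO_NAMESPACE:-external-secrets}"

# Check the operator deployments are available and up to date.
check_operator() {
  kubectl get deployments --namespace "$ESO_NAMESPACE"
}

# ClusterSecretStore is cluster-scoped, so no namespace is needed.
list_cluster_stores() {
  kubectl get clustersecretstore
}

# Show the provider details, capabilities and events for one store.
# $1 = name of the ClusterSecretStore
describe_cluster_store() {
  kubectl describe clustersecretstore "$1"
}
```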

Checking secrets

Having confirmed that the operator and cluster stores are happy, we can check that our ExternalSecret resource was created by looking at the controller log output, and then by checking the resource itself:
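A sketch of those two checks. The namespace and the controller Deployment name (external-secrets) are assumptions based on a default Helm install, so swap in your own if they differ; the function names are ours.

```shell
# Assumption: operator namespace and controller Deployment are both "external-secrets".
ESO_NAMESPACE="${ESO_NAMESPACE:-external-secrets}"

# Tail the controller logs.
# $1 = number of lines to show (defaults to 50)
controller_logs() {
  kubectl logs deployment/external-secrets \
    --namespace "$ESO_NAMESPACE" --tail="${1:-50}"
}

# List every ExternalSecret in the cluster with its sync status.
list_external_secrets() {
  kubectl get externalsecrets --all-namespaces
}

# Show the status and events of one ExternalSecret.
# $1 = ExternalSecret name, $2 = namespace
describe_external_secret() {
  kubectl describe externalsecret "$1" --namespace "$2"
}
```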

Awesome! Now let's drill a bit deeper into the output on the ExternalSecret resource.

If we look at the events, we can see that there was a short delay whilst the store validated its identity with Azure. Once this took place, the secret was updated successfully.

Let's confirm that we can read our secret!

Secret synchronisation

The ExternalSecret provides a pointer for the controllers to create and synchronise a native Secret resource in our cluster. We'll confirm that the secret is in place:

- Note that the ownerReferences show that the ExternalSecret resource owns the Secret resource

- Let's check that the secret data matches the contents of the Key Vault. You will need the name of your key vault here, which should have been output from your Terraform apply task earlier on. We're kind, so have added options for you Windows users below...

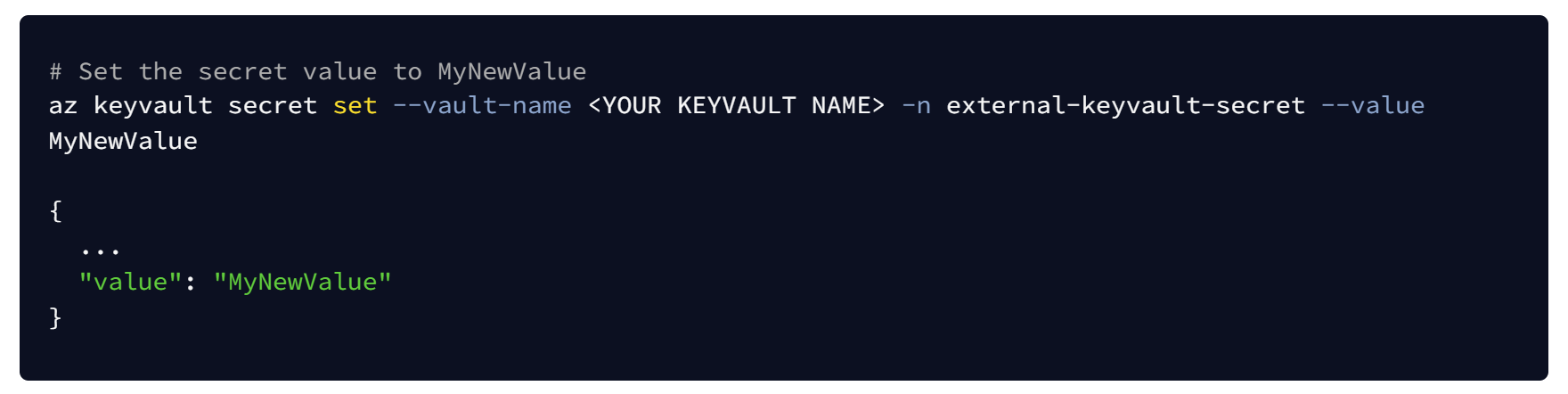
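For a bash-like shell, the comparison might be sketched as below. The function names are ours, and the namespace, secret, key and vault names in the example are hypothetical. Keys containing dots would need escaping in the jsonpath expression.

```shell
# Print the decoded value of one key in a Kubernetes Secret.
# $1 = namespace, $2 = secret name, $3 = key within the secret
k8s_secret_value() {
  kubectl get secret "$2" --namespace "$1" \
    --output "jsonpath={.data.$3}" | base64 --decode
}

# Print the current value of a Key Vault secret.
# $1 = key vault name, $2 = secret name
vault_secret_value() {
  az keyvault secret show --vault-name "$1" --name "$2" \
    --query value --output tsv
}

# Example comparison (hypothetical names):
# [ "$(k8s_secret_value default demo-secret password)" = "$(vault_secret_value my-vault demo-secret)" ] \
#   && echo "values match"
```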

Final check coming up - we'll update the key vault value and see what happens to our Kubernetes secret:
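Updating the vault value can be sketched with the Azure CLI as follows. The function name is ours and the example values are placeholders.

```shell
# Set a new value on a Key Vault secret.
# $1 = key vault name, $2 = secret name, $3 = new value
update_vault_secret() {
  # --output none stops the CLI echoing the secret back to the terminal.
  az keyvault secret set \
    --vault-name "$1" \
    --name "$2" \
    --value "$3" \
    --output none
}

# Example call (placeholder values):
# update_vault_secret "my-vault" "demo-secret" "a-new-value"
```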

- The ExternalSecret resource should (within 60 seconds of the key vault changing) have triggered a synchronisation event:

- Which in turn will have updated the secret:
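Rather than refreshing by hand, a small polling loop can wait for the new value to arrive. This is a sketch of our own rather than anything from the External Secrets docs; the function name, the 5 second poll interval and the 120 second default timeout are arbitrary choices.

```shell
# Poll a Kubernetes Secret until one key holds the expected value.
# $1 = namespace, $2 = secret name, $3 = key,
# $4 = expected value, $5 = timeout in seconds (defaults to 120)
wait_for_secret_value() {
  deadline=$(( $(date +%s) + ${5:-120} ))
  while [ "$(date +%s)" -le "$deadline" ]; do
    value="$(kubectl get secret "$2" --namespace "$1" \
      --output "jsonpath={.data.$3}" | base64 --decode)"
    if [ "$value" = "$4" ]; then
      echo "Secret $1/$2 is in sync"
      return 0
    fi
    sleep 5
  done
  echo "Timed out waiting for $1/$2 to sync" >&2
  return 1
}

# Example call (hypothetical names):
# wait_for_secret_value default demo-secret password "a-new-value"
```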

Success!!

Clearing up

Since we deployed everything with Terraform, cleaning up after ourselves is as simple as:
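This is the standard Terraform teardown, sketched as a helper of our own that takes the folder holding the code as an optional argument.

```shell
# Destroy everything the Terraform configuration created.
# $1 = folder holding the Terraform code (defaults to the current folder)
destroy_demo() {
  terraform -chdir="${1:-.}" destroy
}

# Example call from inside the /terraform folder:
# destroy_demo
```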

This will remove all Kubernetes resources and all Azure resources, leaving your AKS cluster in its previous state. Almost as if nothing happened...

Closing thoughts

AZWI is a great upgrade on Pod Identity, and offers a much simplified administrative model and vastly improved scalability over its predecessor. We really like the native resource model and the portability of the solution across different Kubernetes distributions. Simplifying the controller model will certainly appeal to all those overworked SRE teams out there.

Unfortunately, this won't be a simple migration for many Pod Identity users. The differences between the two products are significant enough to prevent like-for-like replacement, and as such the move to the AZWI model is going to be largely dictated by the CNCF vendors and how quickly they can adapt their existing applications. Whilst it's possible to run both solutions concurrently in your clusters, the key issue really is whether you can fully migrate before Pod Identity stops receiving security patches in September 2023.